Test Your Way to Success: How to Unlock Retail Growth With A/B and Holdout Testing

Effective marketing isn’t guesswork.

It’s a systematic study of your customers — who, more than ever, are defined by their “paradoxical buying patterns.” They want control, but expect ease. They love personalization, but worry about privacy. They prefer the simplicity of digital channels, but crave human interactions.

How can you reach complex customers with the right message?

Continual testing and refinement. Instead of relying on assumptions, treat every campaign as a learning opportunity. By experimenting with different messaging, formats, and channels, you gain real-time insights into what resonates with your customers.

With a strong testing approach, you can adapt quickly, fine-tuning your strategy to align with shifting behaviors and preferences. Even when a test doesn’t win, the insights gained are just as valuable: they help you better understand your customers and set your company on the path to incremental growth and improved revenue.

Methods for testing your marketing message

Experimentation tools and frameworks serve a clear purpose: to help marketers optimize their strategies by testing, analyzing, and refining messages. Testing tools — which range from manual methods to advanced, AI-driven systems — equip you to gather valuable data on customer behaviors, preferences, and responses.

With a customer-driven approach to experimentation, you can make more informed decisions, reduce guesswork, and continuously improve your marketing efforts. Let’s look at two of the most useful methods for testing your marketing message: A/B testing and holdout groups.

A/B testing

A/B testing is a method that lets you compare different versions of a campaign element, such as a subject line, product recommendation, or offer, and then make a data-driven decision about which version performs best.

Primarily, A/B testing helps you validate your ideas before committing fully to a strategy. Instead of guessing which message will resonate with your audience, A/B testing lets you test in real time, optimizing your efforts based on clear, measurable results.

Over time, this process can increase conversion rates and fine-tune your marketing strategy, helping you better understand your customers.

Best practices for A/B testing

To get the most out of your A/B tests, consider these best practices:

- Define clear objectives. Before starting any test, make sure you know what you’re measuring and why. Whether your goal is boosting clickthrough rates, improving engagement, or increasing conversions, establishing a clear objective will keep your test focused and actionable.

- Limit your test to 30 days. To keep your results relevant and accurate, run each A/B test for a defined time period, typically less than 30 days. Extending a test beyond this timeframe often introduces external factors, such as seasonality or overlapping promotions, that skew the results.

- Test one variable at a time. A/B testing works best when you isolate a single element, such as a subject line, call to action, or image. Focusing on one variable removes noise from your data so you can accurately attribute differences in performance to a single, specific change.

- Use a large enough sample size. Every test includes an element of random chance and error. To keep these factors from skewing your results, size your test so it can reach statistical significance. The exact sample size you need depends on your assumptions, including your baseline conversion rate, the smallest lift you want to detect, and your chosen confidence level.

- Learn and improve. As you gather data from one test, use those insights to refine your strategy for future tests, creating a continuous cycle of improvement. This iterative approach enhances your marketing message over time.
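To make the sample-size practice above concrete, here is a minimal sketch of a common approximation for comparing two conversion rates with a two-sided z-test. It is not a description of any specific tool. The function name, the 5% significance level, and the 80% power default are illustrative assumptions, and the example rates are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate recipients needed in EACH variant of an A/B test.

    baseline_rate: expected conversion rate of the control (e.g. 0.10 for 10%).
    relative_lift: smallest relative improvement worth detecting (e.g. 0.20 for +20%).
    alpha, power:  assumed significance level and statistical power.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be distinct and strictly between 0 and 1")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2

    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical scenario: 10% baseline conversion, detect a 20% relative lift.
needed = sample_size_per_variant(0.10, 0.20)
print(f"Recipients needed per variant: {needed}")
```

Note how quickly the requirement grows as the lift you want to detect shrinks. That is one reason small audiences often can’t support tests of subtle changes.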

Holdout groups

A “holdout group” is a segment of your audience, typically around 10%, that you withhold from a campaign. It gives you a baseline against which to measure the campaign’s effect.

Holdout groups provide a clear way to measure the impact of your campaigns. By comparing customers who received a campaign with the holdout group, which acts as your control, you gain valuable insights into how your marketing efforts actually influence behavior.

This process helps you assess the effectiveness of your strategies and see the big picture as your marketing impact takes shape over time.
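As a rough illustration of the comparison described above, the sketch below computes the lift of a treated audience over its holdout group. The function name and all of the numbers are hypothetical, and conversion rate is only one possible metric. The same arithmetic applies to revenue per customer.

```python
def incremental_lift(treated_conversions, treated_size,
                     holdout_conversions, holdout_size):
    """Compare a treated audience against its holdout (control) group."""
    if treated_size <= 0 or holdout_size <= 0:
        raise ValueError("group sizes must be positive")

    treated_rate = treated_conversions / treated_size
    holdout_rate = holdout_conversions / holdout_size
    absolute_lift = treated_rate - holdout_rate

    return {
        "treated_rate": treated_rate,
        "holdout_rate": holdout_rate,
        # Percentage-point difference attributable to the campaign.
        "absolute_lift": absolute_lift,
        # Lift relative to the holdout baseline (undefined if the baseline is 0).
        "relative_lift": absolute_lift / holdout_rate if holdout_rate else None,
        # Estimated extra conversions the campaign drove in the treated group.
        "incremental_conversions": absolute_lift * treated_size,
    }


# Hypothetical campaign: 90,000 customers received it, 10,000 were held out.
result = incremental_lift(2_700, 90_000, 250, 10_000)
for name, value in result.items():
    print(f"{name}: {value}")
```

The key output is the incremental figure: conversions the treated group would likely not have produced without the campaign, rather than the total conversions the campaign touched.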

Best practices for holdout groups

To effectively use holdout groups, keep these best practices in mind:

- Randomize your holdout groups. Randomization protects your results from becoming biased or skewed. Check that the resulting random segment mirrors your full audience in demographics, behaviors, and engagement levels.

- Limit your group to 90 days. As with A/B testing, it’s important to cap the amount of time you use a single holdout group. Typically, the timeframe is less than 90 days. Beyond that, your results are more likely to be influenced by unknown factors.

- Avoid overlap with other tests. If your holdout group is exposed to other tests or marketing activities, it will muddy the results. Plan your campaigns carefully to isolate the holdout group from other variables that may influence behavior.

- Protect the customer experience. Be mindful of the downstream impact on your holdout audience. Make sure customers in the holdout group still receive value through other channels or initiatives to maintain a positive relationship with your brand.

- Focus on big-picture metrics. Holdout groups are best used to assess overall campaign success. Be sure to track high-level KPIs like customer lifetime value, retention rates, or total revenue lift. These broad metrics give you a clearer picture of the long-term effects of your marketing efforts.
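One common way to randomize a holdout group is to hash each customer ID into a bucket, so that assignment is effectively random but stays stable every time you check it. The sketch below is a minimal example under that assumption. The function name, the salt value, and the customer IDs are hypothetical, and the 10% default simply mirrors the typical holdout size mentioned earlier.

```python
import hashlib


def in_holdout(customer_id, experiment_salt, holdout_share=0.10):
    """Return True if this customer falls in the holdout group.

    Hashing the salted ID gives a stable, pseudo-random bucket from 0 to 9,999.
    Using a different salt per experiment reshuffles who is held out.
    """
    if not 0 < holdout_share < 1:
        raise ValueError("holdout_share must be between 0 and 1")

    key = f"{experiment_salt}:{customer_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) % 10_000
    return bucket < round(holdout_share * 10_000)


# Hypothetical audience of 10,000 customer IDs.
customers = [f"customer-{i}" for i in range(10_000)]
holdout = [c for c in customers if in_holdout(c, "spring-campaign")]
print(f"Holdout share: {len(holdout) / len(customers):.1%}")
```

Because the assignment depends only on the ID and the salt, you can recompute it at send time and at analysis time without storing a separate list. You would still want to compare the holdout’s demographics and engagement against the full audience before trusting the results.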

Next steps: How to analyze and iterate

Once your test is complete, it’s time to analyze the results and improve your marketing strategy.

First, gather your data and review it carefully. Focus on key metrics like conversion rates, clickthrough rates, and customer engagement. This sets the foundation for a deeper look.

Second, analyze your results to make sure they’re statistically significant. This means determining if the performance difference between test groups is meaningful enough to support action or if it could be a result of random chance.

Third, assess the insights you gained from the test and how they can be applied to future campaigns. This involves identifying what worked well, what didn’t, and how your customers responded to different elements of the campaign. Look beyond surface-level metrics to understand the underlying reasons for performance.

Finally, take the learnings from your test and put them to work. This may mean applying successful elements to other areas of your marketing or revising aspects of the campaign that underperformed. Testing should be a continuous process, with each experiment building on the lessons of the previous one.
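For the significance check in the second step, one widely used approach is a two-proportion z-test. The sketch below is a simplified example rather than a full analysis workflow. The function name and the conversion counts are hypothetical, and the 0.05 threshold is a common convention, not a rule.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conversions_a, size_a, conversions_b, size_b):
    """Two-sided z-test for the difference between two conversion rates.

    Returns (z, p_value). A small p-value suggests the difference between
    variants is unlikely to be the result of random chance alone.
    """
    rate_a = conversions_a / size_a
    rate_b = conversions_b / size_b

    # Pooled rate under the assumption that both variants perform the same.
    pooled = (conversions_a + conversions_b) / (size_a + size_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / size_a + 1 / size_b))
    if std_error == 0:
        raise ValueError("cannot test when the pooled rate is 0 or 1")

    z = (rate_b - rate_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical test: variant A converts 200 of 10,000; variant B converts 260 of 10,000.
z, p_value = two_proportion_z_test(200, 10_000, 260, 10_000)
verdict = "significant" if p_value < 0.05 else "not significant"
print(f"z = {z:.2f}, p = {p_value:.4f} ({verdict} at the 0.05 level)")
```

A significant result tells you the difference is probably real, not that it is large. Pair the p-value with the size of the lift before deciding whether a change is worth rolling out.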

How most marketers test (and how they should)

While up to 77% of companies use website A/B testing, the testing process hasn’t evolved much in recent years. Most marketers still rely on traditional methods like spreadsheets and manual tracking to manage their testing programs.

Manual testing introduces multiple downsides.

For starters, it’s incredibly time-consuming. Marketers have to determine testing groups, calculate statistical significance, juggle incoming responses, and collate results. Worse, the time commitment only grows as you scale.

Human error is also an issue. When running tests by hand, marketers can make all-too-easy mistakes at every stage of the testing process. And once an error is introduced, it’s hard to unwind, costing your team valuable time and effort.

Additionally, manual test results are often painfully slow. As the marketing team works to organize and analyze incoming data, your company may continue operating with outdated information. The result: missed key opportunities to pivot or scale winning strategies in real time.

As a result, many teams find that only 1 in 8 tests yields valuable results. The rest are limited by a lack of statistical significance, muddled results, and slow turnaround times.

Here’s the good news: With the right technology, you can experiment faster and more accurately, and scale at will.

At its most basic, built-in automation eliminates time-consuming tasks like calculating statistical significance and managing multiple data streams. This allows you and your team to focus less on logistics and more on testing strategy.

Tech-enabled experimentation also provides real-time feedback, helping you quickly pivot and adapt to evolving customer behaviors — instead of waiting weeks for results. This accelerates decision-making and improves the accuracy of your tests by reducing human error and locking in consistency across campaigns.

Finally, advanced experimentation tools give you a holistic, unified view of your data. With all tests centralized in one platform, you gain a deeper look at cross-channel performance and customer journeys — miles beyond the fragmented, manual methods most marketers use today.

Meet Bluecore’s Experimentation Hub

At Bluecore, we’re focused on empowering retailers to discover their best customers and keep them for life. That’s why we’ve launched a feature-rich Experimentation Hub.

Shoppers expect more personalized experiences, and the competition to capture attention is fierce. To stay ahead, retailers need to move beyond outdated testing methods and take advantage of a modern, data-driven approach.

An extension of our customer analytics product, Bluecore’s Experimentation Hub equips retailers to make faster, smarter decisions, powered by real-time insights and automation. Rather than focusing solely on the standard campaign and channel-level tests, retailers can now test their marketing against larger customer-centric goals such as increasing first-time buyer conversions, average order value, or purchase frequency.

By testing their campaigns across critical audiences, including new buyers and retained and reactivated customers, retailers can identify and prioritize the strategies that drive growth.

Centralized hub

Many teams don’t have time to manage multiple experiments across different channels and customer journeys. This leads to testing small changes within channel execution, which don’t necessarily translate to a real impact. Multiple small tests running concurrently also a

With a centralized hub, you can bring everything into one streamlined view that covers all of your A/B and holdout tests.

This allows your team to easily oversee test-and-learn programs, locking in consistency and accuracy at every stage. The Experimentation Hub automates the entire testing process, from setup to execution, reducing manual effort and saving valuable hours. By centralizing experimentation, you make data-driven decision-making faster and more efficient.

Automated results reporting

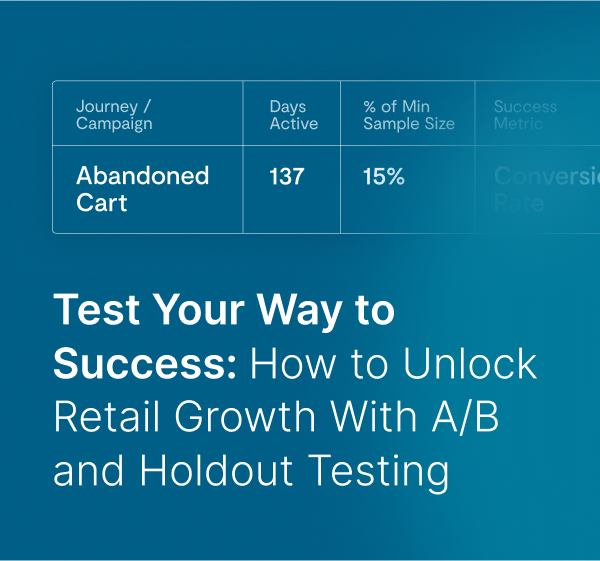

Say goodbye to manual number crunching and delayed insights. With automated results, you can access real-time updates on critical metrics such as statistical significance, success rates, and the age and velocity of each test.

The Experimentation Hub tracks test performance in real time, flagging moments that need your attention. You can also use filters within the Hub to manage and organize your tests — that way, you’re always focusing on the most relevant data.

This automation protects your accuracy and eliminates human error. It also provides actionable insights at a glance, enabling faster, more informed decisions.

Scale and compound learnings

Successful tests shouldn’t stop at a single win. With the built-in ability to scale and compound your learnings, you can take what works and apply it across broader audiences, campaigns, or channels.

Our Experimentation Hub enables you to easily replicate winning strategies. Maximize the impact of your tests and keep your marketing efforts on an upward trajectory.

If you’re a Bluecore customer, reach out to your Customer Success Manager to explore how you can use Experimentation Hub to get the answers you need faster. If you’re new to Bluecore, schedule time to chat with our team about the Experimentation Hub.